Today there are two kinds of user-facing software products: products that use machine learning and automation to adapt to and help realize users’ intentions, and products that are friction-ridden, requiring carefully memorized and repetitive interactions. Google Search, Siri, and Spotify are in the former category of products. Today’s Security Operations Center (SOC) platforms are in the latter, non-adaptive, friction-ridden category.

In the next five years, this will change. Successful security products will become as savvy as Google and Facebook in recommending relevant security information, and as precise as Alexa and Siri in anticipating the intent behind security-oriented natural-language requests. They will also combine artificial intelligence technologies with the kinds of system integrations smart-home ecosystems have achieved, updating security policies just as smart homes turn on security cameras and lock doors at user request.

This new “AI-assisted SOC” will feel as dramatically superior to today’s SOCs as today’s Google search feels compared to 1990s-era AltaVista. With AI distilling the wisdom of a global “crowd” of SOC analysts into a kind of co-pilot for security workflows, auto-completing analysts’ workflows and anticipating their intent, security personnel will be dramatically more effective.

Of course, this change will not emerge from a vacuum; it will result from several technology trends converging today. The first is the increasing integration of all relevant security data across entire customer bases by extended detection and response (XDR) vendors, which for the first time provides the training data that the AI-assisted SOC’s machine learning models will need. The second is the AI innovation occurring across the tech industry, in which the research community continues to produce better machine learning (ML) algorithms, tools, and cloud AI infrastructure, creating opportunities to build the AI-assisted SOC’s ML capabilities.

The third trend is programmable security posture, in which IT, cloud, and security products increasingly expose robust management APIs. As more of the IT landscape becomes controllable via API, opportunities will continue to emerge for the AI-assisted SOC to provide security orchestration, automation, and response (SOAR) capabilities that behave like smart home ecosystems, updating organizations’ security postures and remediating incidents via push-button automation.

How developments in XDR, AI innovation, and programmable security posture will come together to produce the AI-assisted SOC, and what the AI-assisted SOC of the future will look like, is the subject of this whitepaper. We’ve divided the discussion into three sections:

- A vision of the AI-assisted SOC. In this section, we paint a picture of the SOC of the future, walking through concept mock-ups and hypothetical features that will characterize the AI-assisted SOC.

- Sophos’ AI-assisted SOC roadmap. In this section we describe our plan to deliver the AI-assisted SOC over the next 5 years. We discuss our AI innovation roadmap and our plans to leverage data from Sophos’ XDR product to drive AI model accuracy. We also discuss the ways we plan to leverage vendor product APIs, and the “Everything as Code” trend (e.g., Infrastructure as Code, IT as Code) to automate AI-formulated security posture updates and incident response actions.

- Sophos’ current work towards achieving the AI-assisted SOC. In this section we show the results of three research prototypes built at Sophos, demonstrating that the hard research challenges implicit in our vision are likely to be solvable in a five-year time frame.

A vision of the AI-assisted SOC

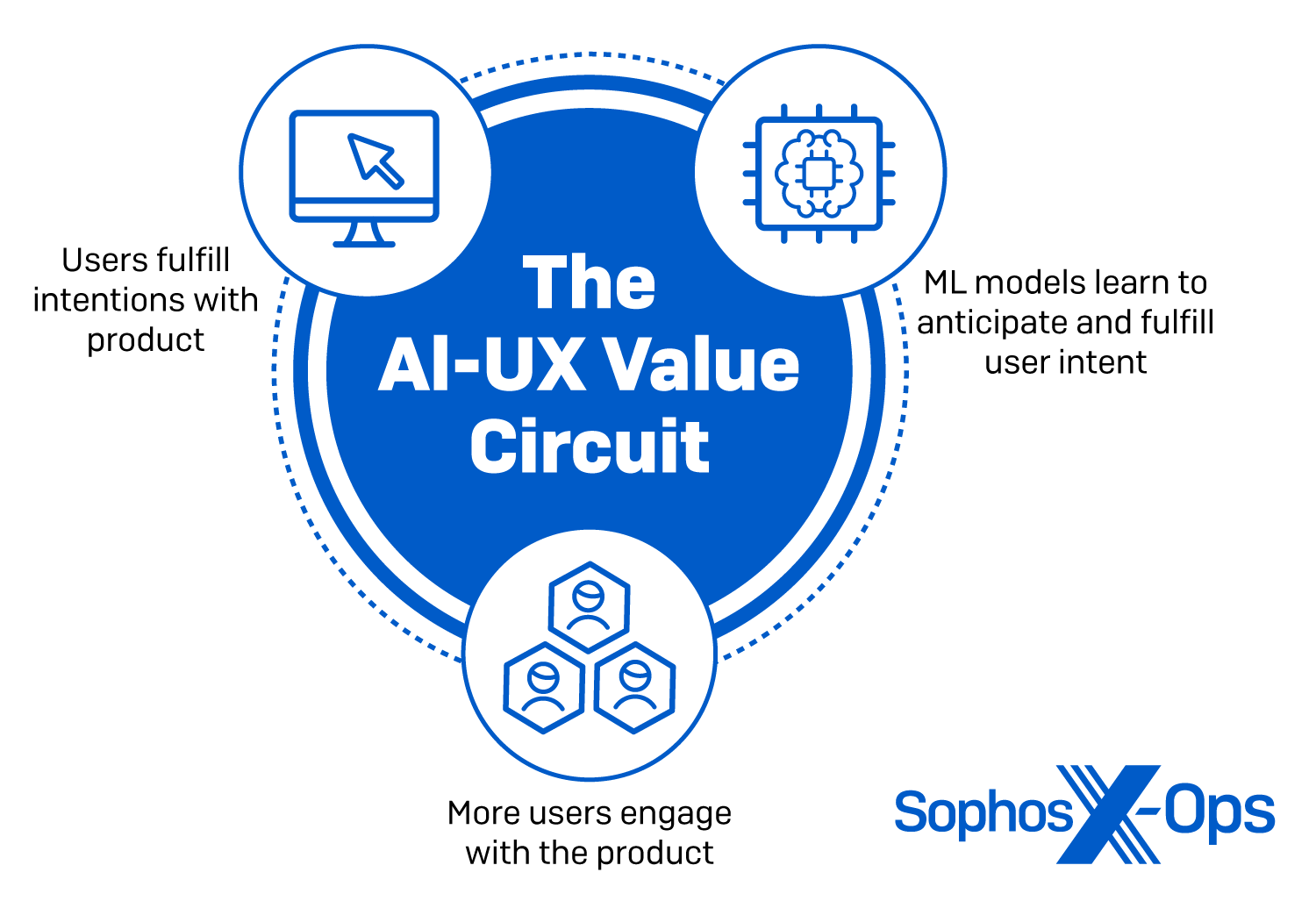

Figure 1: The AI-UX value circuit

The AI-assisted SOC will be powered by a design pattern that’s come to pervade UX work outside security: the AI-UX value circuit, shown in Figure 1. The simplest example is the auto-suggest feature provided by touchscreen keyboards, email clients, and search engines. Here, as users type, AI systems suggest text “completions” and correct errors. These AI systems, in turn, learn from users. This circuit is immensely powerful: imagine touchscreen typing without auto-complete and error correction. And the AI-UX value circuit is ubiquitous; it is used in music, movie, and product recommendation systems, in search result ranking, in semi-autonomous driving, and in many other contexts.
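To make the circuit concrete, here is a toy Python sketch, not a description of any production system: an auto-suggest component that offers completions for a typed prefix and treats every accepted suggestion as new training signal. The class and method names are hypothetical, and simple acceptance counts stand in for a real language model.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class LearningAutocomplete:
    """Toy AI-UX value circuit: suggest completions, then learn from what users accept."""

    def __init__(self) -> None:
        # prefix -> Counter of completions that users actually accepted after typing it
        self._accepted: defaultdict[str, Counter] = defaultdict(Counter)

    def suggest(self, prefix: str, k: int = 3) -> list[str]:
        """Return up to k completions for prefix, most often accepted first."""
        counts = self._accepted.get(prefix)
        if counts is None:
            return []
        return [completion for completion, _ in counts.most_common(k)]

    def record_choice(self, prefix: str, completion: str) -> None:
        """Feedback half of the circuit: each accepted completion updates the model."""
        self._accepted[prefix][completion] += 1
```

After users accept "powershell" twice and "power" once for the prefix "pow", suggest("pow") returns ['powershell', 'power']: the suggestions improve precisely because users interact with them.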

In the next five years, successful SOC software providers will instantiate the AI-UX value circuit in security, creating a set of features that function as a kind of recommendation engine for security, driven by the wisdom of the global SOC analyst crowd. When this AI technology is fused with API integrations into IT products and used to modify the code that defines cloud infrastructure topologies and configurations, SOC software will feel like smart home software, updating IT security posture by enacting recommended playbooks at the push of a button.

Below we give specifics on how the AI-UX value circuit will impact alert recommendation, security data enrichment, and incident remediation, before discussing enterprise IT and IT security API integration.

AI-assisted alert recommendation

Today’s detection systems are as limited as keyword-based web search engines of the 1990s because they do not contain an AI-UX value circuit. When users across multiple SOCs choose to escalate or dismiss a class of alerts, SOC platforms do not detect this temporal trend and up-rank or down-rank the relevant alerts, just as first-generation search engines did not learn from user interaction.

By ignoring the behavior of the SOC analyst ‘crowd,’ today’s SOC platforms squander the user interaction data that other tech sectors already exploit. By leveraging the AI-UX value circuit, the AI-assisted SOC will dramatically improve on this, recommending alerts based on information derived from real-time crowd behavior and customized to individual organizational environments, much as Google ranks search results based on the real-time evolution of current events and crowd behavior and customizes them based on user profiles.
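As a deliberately simplified illustration of crowd-driven up-ranking and down-ranking, the Python sketch below pools escalate/dismiss decisions per alert class across SOCs and ranks new alerts by a smoothed escalation rate. It is an assumption made for illustration only: the alert-class grouping, the Beta prior of one escalation and nine dismissals, and all names are hypothetical, and it omits the time weighting and per-organization customization described above.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    alert_class: str  # hypothetical grouping key, e.g. the detection rule that fired


class CrowdAlertRanker:
    """Rank alerts by a smoothed, crowd-wide escalation rate for their alert class."""

    def __init__(self, prior_escalations: float = 1.0, prior_dismissals: float = 9.0) -> None:
        # Beta(1, 9) prior: a class nobody has triaged yet starts at a 10% escalation rate.
        self.prior_esc = prior_escalations
        self.prior_dis = prior_dismissals
        self.escalated: defaultdict[str, int] = defaultdict(int)
        self.dismissed: defaultdict[str, int] = defaultdict(int)

    def record_decision(self, alert_class: str, escalated: bool) -> None:
        """Feedback from any analyst in any participating SOC."""
        if escalated:
            self.escalated[alert_class] += 1
        else:
            self.dismissed[alert_class] += 1

    def escalation_rate(self, alert_class: str) -> float:
        """Posterior mean escalation probability for the class."""
        esc = self.escalated.get(alert_class, 0) + self.prior_esc
        dis = self.dismissed.get(alert_class, 0) + self.prior_dis
        return esc / (esc + dis)

    def rank(self, alerts: list[Alert]) -> list[Alert]:
        """Alerts most likely to be escalated come first."""
        return sorted(alerts, key=lambda a: self.escalation_rate(a.alert_class), reverse=True)
```

With eight crowd escalations of a hypothetical suspicious_powershell class and twenty dismissals of a noisy_scanner class, the smoothed rates are 9/18 = 0.5 and 1/30 ≈ 0.03, while a never-seen class sits at the prior's 0.1, so the queue is ordered PowerShell alert, unseen alert, scanner alert. The prior keeps a class with a single lucky escalation (2/11 ≈ 0.18 rather than 1.0) from jumping to the top of every queue.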

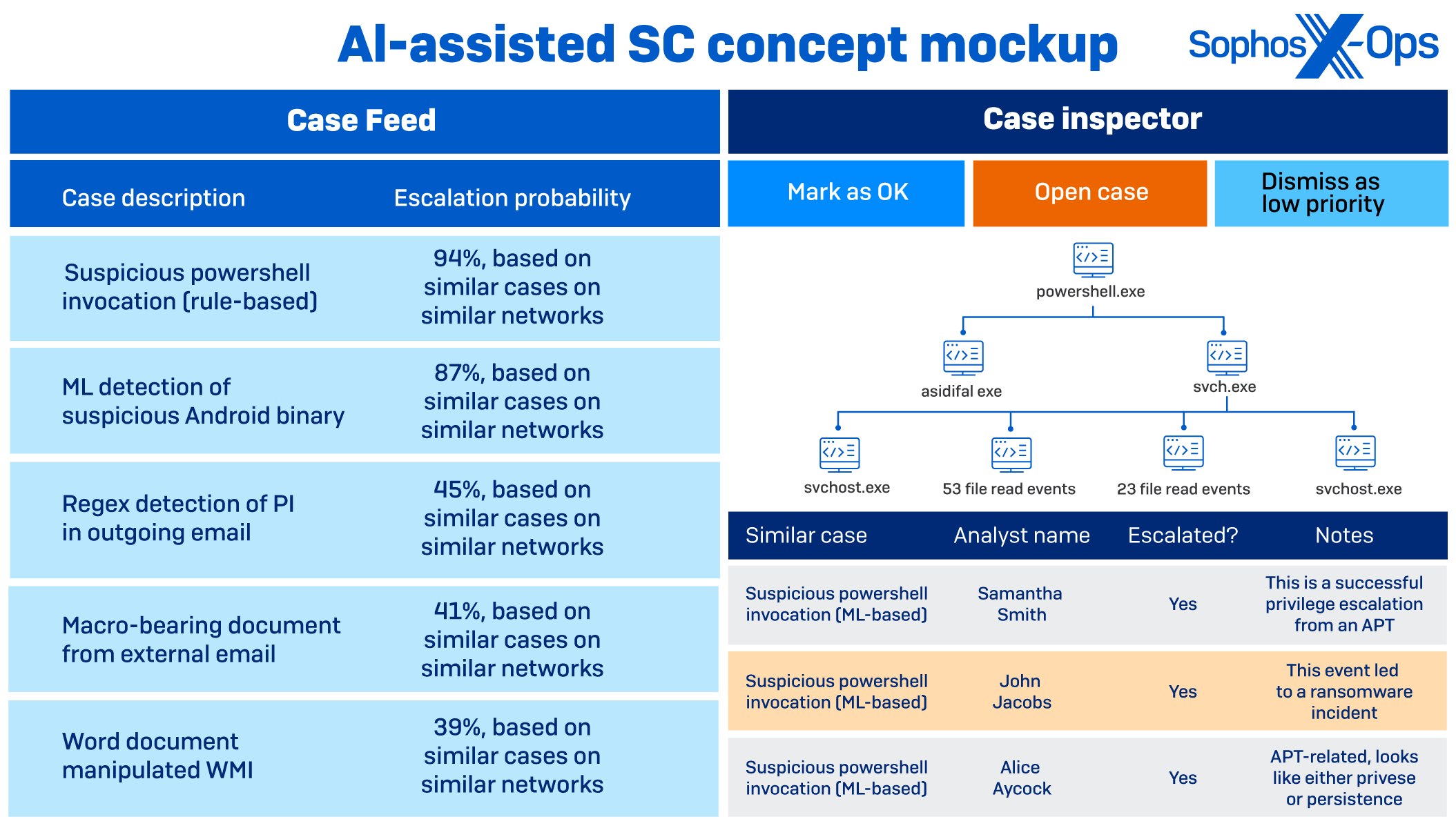

To illustrate this, Figure 2 imagines a future SOC alert recommendation system. The panel on the left of the figure shows how AI-enabled SOCs will order alerts emitted by arbitrary security detectors based on analyst/alert interactions across thousands of security operations centers. For example, the mock-up shows how the selected alert (in yellow), labeled “Suspicious PowerShell invocation (ML-based)”, was deemed high-priority because similar cases on similar networks were escalated by analysts in other SOCs.

Relatedly, the panel on the right of Figure 2 shows how SOC analyst “crowd knowledge” could be overlaid on top of the lower-level details of alerts of interest. Here, we see that an analyst who may work at a different customer SOC, but whose organization has chosen to share threat-related information, has noted that an alert similar to the focal alert “led to a ransomware incident.” Because our envisioned crowdsourced AI approach will combine the knowledge and experience of tens of thousands of analysts in its real-time decision-making, it will provide indispensable help in scenarios like these.

Figure 2: A concept mockup for an AI-assisted alert triage system

AI-assisted security data enrichment

AI-assisted SOCs will go beyond alert recommendation; they will anticipate what data analysts will need to follow up on alerts and retrieve it proactively. They will also automate “low-hanging fruit” reverse engineering and data analysis tasks.

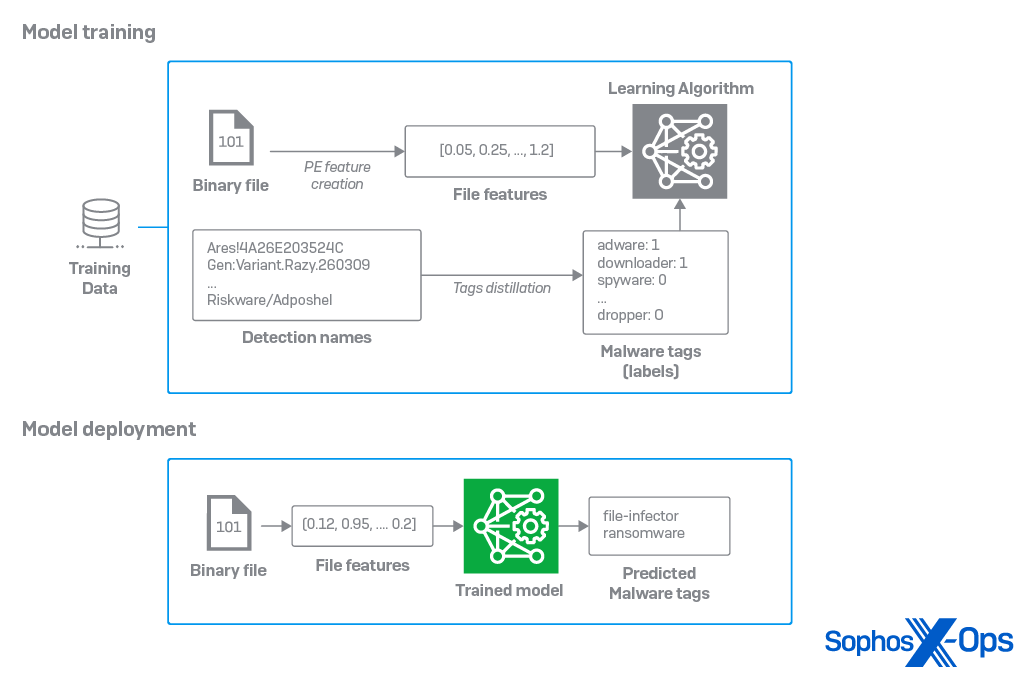

Figure 3: An AI malware-description system designed by the Sophos AI team

The malware description generation ML model shown in Figure 3 gives an example of AI-assisted automation of low-hanging-fruit reverse engineering. This technology, developed by a team at Sophos, automatically describes malware functionality based on its low-level binary features, enhancing analyst decision making. The model is trained on millions of malware samples paired with malware descriptions contributed by the entire ecosystem of antivirus vendors. In the future, such systems will be trained on textual malware descriptions written by the analysts who actually reverse engineer the samples.

We give more examples of enrichments in Table 1 below. All of these would dramatically accelerate SOC reverse engineering and threat intelligence work, and would also streamline follow-up workflows.

| AI data enrichment application | Use cases for application |
|---|---|
| AI security artifact enrichment | Explain the malicious intent of arcane suspicious scripts to non-specialist analysts in small SOCs |
| AI artifact-to-actor mapping enrichment | Provide analysts with attribution and intent for incidents (linking them to a threat actor group) so they can better choose a response strategy |
| AI alert explanation | Give analysts a head start in following up on high-priority alerts based on “crowd knowledge” about similar alerts |
| AI workflow pre-fetching | Proactively perform time-saving work to retrieve and provide supporting data and helpful context to an analyst based on previously observed workflows for similar detections and alerts |

Table 1: Examples of use cases for various AI-assisted data enrichments
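Workflow pre-fetching, the last row of Table 1, can be sketched as frequency counting over past workflows. In this hypothetical Python example, each observed case records which lookups an analyst ran for a given alert type, and lookups used in at least half of past cases are fetched before the next analyst asks for them. The lookup names and the 50% cutoff are assumptions; a production system would more likely condition on richer alert similarity than an exact alert-type match.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class WorkflowPrefetcher:
    """Learn which data lookups analysts tend to run for each alert type, then prefetch them."""

    def __init__(self) -> None:
        self._lookups: defaultdict[str, Counter] = defaultdict(Counter)  # alert type -> lookup counts
        self._cases: Counter = Counter()                                 # alert type -> cases observed

    def observe_workflow(self, alert_type: str, lookups_performed: list[str]) -> None:
        """Record one analyst's handling of an alert (each lookup counted once per case)."""
        self._cases[alert_type] += 1
        self._lookups[alert_type].update(set(lookups_performed))

    def lookups_to_prefetch(self, alert_type: str, min_share: float = 0.5) -> list[str]:
        """Return lookups used in at least min_share of past cases for this alert type."""
        n_cases = self._cases[alert_type]  # Counter returns 0 for unseen keys
        if n_cases == 0:
            return []
        return sorted(
            lookup
            for lookup, count in self._lookups[alert_type].items()
            if count / n_cases >= min_share
        )
```

If three past cases of a hypothetical suspicious_powershell alert used process_tree every time, script_content twice, and user_logons and dns_history once each, lookups_to_prefetch returns ['process_tree', 'script_content'], so that context is ready when the analyst opens the alert.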

AI-assisted incident remediation recommendation

The AI-assisted SOC will recommend crowdsourced recipes for addressing security alerts, incidents, and posture updates as analysts execute their workflows. In Table 2, we give a schema for how this capability will emerge, and we have shown a concept mock-up for this feature in Figure 2 (above). The figure shows how, by taking analyst notes on past incidents and propagating them to new, relevant incidents, we can arm analysts with a crowd “co-pilot.”

It would be hard to overstate the impact this capability will have. As the size of the SOC analyst user “crowd” grows, and information is aggregated at an increasing rate, AI-assisted incident remediation recommendations could become as indispensable to SOC analysts as Stack Overflow is to programmers. Feedback mechanisms built natively into SOC workflows will continuously recalibrate recipe recommendations based on the preferences analysts demonstrate.
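A minimal sketch of the first maturity stage, recommending past analyst notes for a new incident and recalibrating with feedback, might look like the Python below. Purely for illustration, we assume each incident can be summarized as a set of descriptive tags, use Jaccard similarity in place of a learned model, and scale each note's score by a smoothed share of "helpful" votes. All names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class IncidentNote:
    text: str
    tags: frozenset[str]        # hypothetical incident descriptors, e.g. detection or technique tags
    helpful_votes: int = 0
    unhelpful_votes: int = 0

    def feedback_weight(self) -> float:
        """Laplace-smoothed share of 'helpful' votes; an unrated note starts at 0.5."""
        return (self.helpful_votes + 1) / (self.helpful_votes + self.unhelpful_votes + 2)


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Tag overlap between two incidents: 0.0 if disjoint, 1.0 if identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_notes(incident_tags: frozenset[str], notes: list[IncidentNote], k: int = 3) -> list[IncidentNote]:
    """Rank past notes by similarity to the new incident, scaled by analyst feedback."""
    scored = [(jaccard(incident_tags, note.tags) * note.feedback_weight(), note) for note in notes]
    scored = [pair for pair in scored if pair[0] > 0]   # drop notes with no tag overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [note for _, note in scored[:k]]
```

With notes tagged {powershell, encoded_command, smb_lateral} and {powershell, signed_script}, a new incident tagged {powershell, encoded_command} scores 2/3 × 0.5 ≈ 0.33 against the first note and 1/3 × 0.5 ≈ 0.17 against the second. If analysts mark the first note unhelpful five times, its weight falls to 1/7 and the second note takes the top spot.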

We imagine AI-assisted incident remediation evolving in stages. In the first phase, textual incident comments will be recommended, as shown in Table 2. In the next phase, we foresee XDR tools automatically distilling course-of-action summaries based on analyst activity so that analysts don’t have to write up after-action reports manually. This will increase the breadth of experience encoded in the “security recipe recommendation system,” reduce the labor invested in writing reports, and increase their accuracy.

In the final stage of course-of-action recommendation development, AI systems will automatically translate previously exercised courses of action to new scenarios and operating contexts, allowing for “push-button” automation of these recommended courses of action via security and IT product APIs. Our perspective is summarized in Table 2, below.

| ML incident remediation maturity stage | Capability description |
|---|---|
| Remediation recipe recommendation | As crowd analysts handle incidents and write reports about their actions, AI recommends these reports to other analysts handling similar incidents, creating a flywheel for accumulating a real-time Wikipedia of incident-response knowledge |
| Remediation recipe generation and recommendation | As crowd analysts take incident response actions, XDR software records these actions and then suggests them for consideration in new, relevant contexts, increasing the throughput of the crowdsourced incident response knowledge base. The same process can improve the generation of documentation, which is frequently either neglected or overly time-consuming |
| Push-button execution of automatically generated remediations | As crowd analysts take remedial action, XDR learns action templates and offers to automatically apply them in new, relevant contexts, decreasing incident response time as the system accumulates knowledge |

Table 2: How machine learning incident remediation recommendation will mature
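The "action template" idea in the last row of Table 2 can be illustrated with a naive Python sketch: recorded response actions are generalized by replacing incident-specific values (a hostname, an attacker IP) with named slots, then re-instantiated for a new incident and proposed for analyst confirmation. The action names, parameters, and exact-match generalization rule are all assumptions, not a description of Sophos' implementation.

```python
from __future__ import annotations

from dataclasses import dataclass
from string import Template


@dataclass
class Action:
    name: str                # hypothetical action identifier, e.g. "isolate_host"
    params: dict[str, str]   # action parameters as strings


def learn_template(actions: list[Action], context: dict[str, str]) -> list[tuple[str, dict[str, str]]]:
    """Generalize recorded actions: any parameter value taken from the incident becomes a ${slot}."""
    value_to_slot = {value: slot for slot, value in context.items()}
    template = []
    for action in actions:
        generalized = {}
        for param, value in action.params.items():
            if value in value_to_slot:
                generalized[param] = "${" + value_to_slot[value] + "}"
            else:
                generalized[param] = value.replace("$", "$$")  # escape literal "$" for Template
        template.append((action.name, generalized))
    return template


def instantiate(template: list[tuple[str, dict[str, str]]], context: dict[str, str]) -> list[Action]:
    """Fill the slots with a new incident's values, producing actions to propose to an analyst."""
    return [
        Action(name, {param: Template(pattern).substitute(context) for param, pattern in params.items()})
        for name, params in template
    ]
```

Exact-value matching is the weakest part of this sketch: if an incident value happened to coincide with an unrelated parameter (a port number, say), it would wrongly become a slot, which is presumably why a real system would need learned models rather than string substitution. Note that instantiate only proposes actions; execution would still wait for the analyst to push the button.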

AI-assisted automation of security workflows

Figure 4: The growing importance of APIs across the tech landscape (https://blogs.informatica.com/2020/02/28/how-to-win-with-apis-part-3/)

The AI-assisted SOC will not only infer user intent; it will also automate previously laborious actions, just as smart home systems automate a wide variety of previously manually performed actions. Smart home ecosystems automate instantaneous actions (“turn on the downstairs lights”), scheduled actions (“turn the lights on every evening at 6:00PM”), and complex, conditional actions (“open the garage door every day when I’m a quarter mile from home”). Behind the scenes, these ecosystems translate ambiguous natural signals (spoken natural-language input) to deterministic API requests addressing products from multiple vendors.

Analogously, AI-assisted SOC software will enable fluid natural-language control over diverse IT infrastructure spanning multiple vendors (accommodating commands such as “set firewall rules to restrict ‘ssh’ access in my AWS infrastructure to only in-office IP addresses starting next Monday at 8:00AM”). Additionally, and as discussed above, AI-assisted SOC software will often recommend courses of action to analysts, which it will then implement with their confirmation (for example, recommending that HTTP servers filter incoming connections from addresses in a given IP range, based on threat intelligence data, and then implementing this policy automatically upon analyst confirmation).
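Behind a command like the SSH example, the natural-language model's job can end once it has produced a structured intent; everything after that can be deterministic. The Python sketch below assumes a hypothetical intent schema and a vendor-neutral request format (it is not the AWS API or any other real product API), resolves "next Monday at 8:00AM" as the first Monday 8:00 strictly after the current time (itself an interpretation an assistant would want to confirm), and expands the intent into one allow rule per office address range plus a catch-all deny.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FirewallIntent:
    """Hypothetical structured intent that a language model might emit for the SSH command."""
    port: int                  # 22 for ssh
    allowed_cidrs: list[str]   # in-office address ranges
    scope: str                 # target environment, e.g. "aws"
    start: datetime            # when the policy should take effect


def next_weekday_at(now: datetime, weekday: int, hour: int, minute: int = 0) -> datetime:
    """First occurrence of weekday (Monday == 0) at hour:minute strictly after now."""
    days_ahead = (weekday - now.weekday()) % 7
    candidate = (now + timedelta(days=days_ahead)).replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=7)
    return candidate


def to_requests(intent: FirewallIntent) -> list[dict[str, object]]:
    """Deterministically expand the intent: allow each office range, then deny everything else."""
    common = {"protocol": "tcp", "port": intent.port, "scope": intent.scope,
              "effective_at": intent.start.isoformat()}
    allows = [{"action": "allow", "source": cidr, **common} for cidr in intent.allowed_cidrs]
    return allows + [{"action": "deny", "source": "0.0.0.0/0", **common}]
```

Issued on Wednesday, 14 September 2022 at 15:30, "next Monday at 8:00AM" resolves to 2022-09-19T08:00:00. Keeping the model's output structured and the expansion deterministic means the resulting requests can be reviewed before they are applied, and the same intent could be rendered into whatever format a particular vendor's API expects.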

These automation features will rely on the rapid and accelerating implementation of management APIs across the enterprise IT and IT security landscape. Figure 4 dramatizes this, showing the growth in API consumption across the tech landscape over the past few years. Charts like these suggest that over the next five years, APIs in IT and IT security will become so ubiquitous that nearly any task of gathering data, updating security posture, or taking remedial action in an IT security context will be achievable through automated, programmatic means.

Sophos’ roadmap for achieving the AI-assisted SOC

Table 3 gives our roadmap for delivering our vision of the AI-assisted SOC during the period 2021-25; note that we are in the second year of this five-year plan. The top row of the table gives the headline capabilities we have already delivered or will deliver. The next rows give the enabling deliverables required to support them, along three technical tracks: XDR platform innovation, security AI innovation, and programmable security posture. A few points in the roadmap are especially salient and worth highlighting.

| Capability | 2021 | 2022 | 2023 | 2024 | 2025 |
|---|---|---|---|---|---|
| AI-driven SOC feature | AI alert recommendation engine based on crowd training data – done | AI-recommended, crowdsourced incident response reports; AI alert enrichment | AI-recommended incident response action suggestions automatically generated based on analyst behavior | Push-button implementation of AI-suggested actions via SaaS/IaaS APIs | Automation of routine SOC work by AI-suggested actions via AI models and SaaS/IaaS APIs |
| XDR platform development | AI / analyst feedback loop for AI alert recommendations – done | AI / analyst feedback loop for incident response playbook recommendation | Instrumentation of analyst actions to feed ML incident response models | Integration with SaaS/IaaS and security product APIs to support push-button AI-recommended actions | Further integration with third-party APIs to support semi-autonomous security posture and incident response actions |
| Security AI innovation | ML models for AI / analyst alert recommendation feedback loop – done | ML models for incident response recipe recommendation | ML models for auto-generation of incident-response recipes | ML models for auto-suggesting incident response actions | ML models for automating rote ML security work |
| API-based automated response innovation | Tracking of user-driven security posture update API requests to support ML auto-action models | Auto-translation of ML model suggestions to network security posture API requests | Implementation of semi-autonomous security posture actions via security posture API requests |

Table 3: Sophos’ five-year plan for achieving the AI-assisted SOC

First, delivering the AI-assisted SOC will require substantial innovation along multiple fronts, but won’t require research miracles. Indeed, there are mature existence proofs outside of security for every technology that we are developing. Security alert recommendation, as we have discussed, is analogous to the kinds of ranking problems solved by existing media recommendation systems (e.g., Spotify) and product recommendation systems (e.g., Amazon).

Incident response report recommendation, which will help analysts leverage organizational knowledge when responding to new incidents, is analogous to question answering functionality supported by personal voice assistants like Siri, Alexa, and Google Assistant, which pull crowdsourced snippets of text from the web to answer questions (e.g., “what are common symptoms of strep throat?”). Automatic incident response action suggestions are analogous to modern AI-assisted help dialogues that have recently appeared in Microsoft Office. The major work captured in our roadmap, then, is around transposing and adapting technologies and ideas from these adjacent disciplines into the security domain. No small task, but not an insurmountable one.

The second salient point in our roadmap is that by 2025, the affordances of the modern SOC will have fundamentally transformed. Cutting-edge SOCs will leverage real-time feeds of data from the behavior of the SOC analyst “crowd,” distilled by AI models, to dramatically accelerate their workflows. Indeed, the AI-assisted SOC will arm SOC analysts with a kind of “auto-complete” for their workflows, coupled with the predictive surfacing of relevant information, and this will transform security workflows.

A final point is that from a science and engineering perspective, our roadmap represents a big bet on the possibility of transformational, AI-assisted change within the security industry, and as such, will require a change in habits of mind and a questioning of long-held assumptions within our industry. It will require trial and error and getting comfortable with the uncertainty that comes with trying out new approaches over existing ones. We are committed to this journey and believe that our industry must adapt if we are to succeed in breaking through longstanding barriers to better defensive cybersecurity.

Sophos’ AI-assisted SOC research and development efforts

Is the AI-assisted SOC really within reach, or will it continue to be “just five years away”? In this section, we show that our vision is tractable by describing current research by Sophos AI, Sophos’ dedicated security machine learning and artificial intelligence research team. We describe our work in three areas: ML alert recommendation, ML security data enrichment, and AI-UX value circuit-based behavioral detection. Table 4 summarizes the purpose and maturity of each of Sophos’ research threads, and how each contributes to our overall vision for the AI-assisted SOC.

| Research thread | Purpose | Maturity | Contribution to overall AI-assisted SOC vision |
|---|---|---|---|
| ML alert recommendation | Prioritize high-value alerts to SOC analysts based on analyst-crowd feedback | Currently in “beta” use within Sophos’ managed SOC service | Realizes the AI-UX value circuit with respect to multi-product alerts |
| ML security data enrichment | Leverage crowd data to provide analysts with decision-relevant information | Multiple mature prototypes, some of which are in Sophos’ products | Realizes first steps toward anticipating data needed by SOC analysts |
| AI-UX value circuit-based living-off-the-land detection | Create a feedback loop between analysts and ML-based detectors to iteratively improve living-off-the-land detection accuracy | Currently in early prototype and under development within the Sophos AI team | By grouping similar low-level events, we’ll make analyst note recommendation possible |

Table 4: Three Sophos research threads operating in support of the AI-assisted SOC roadmap

Machine learning alert recommendation

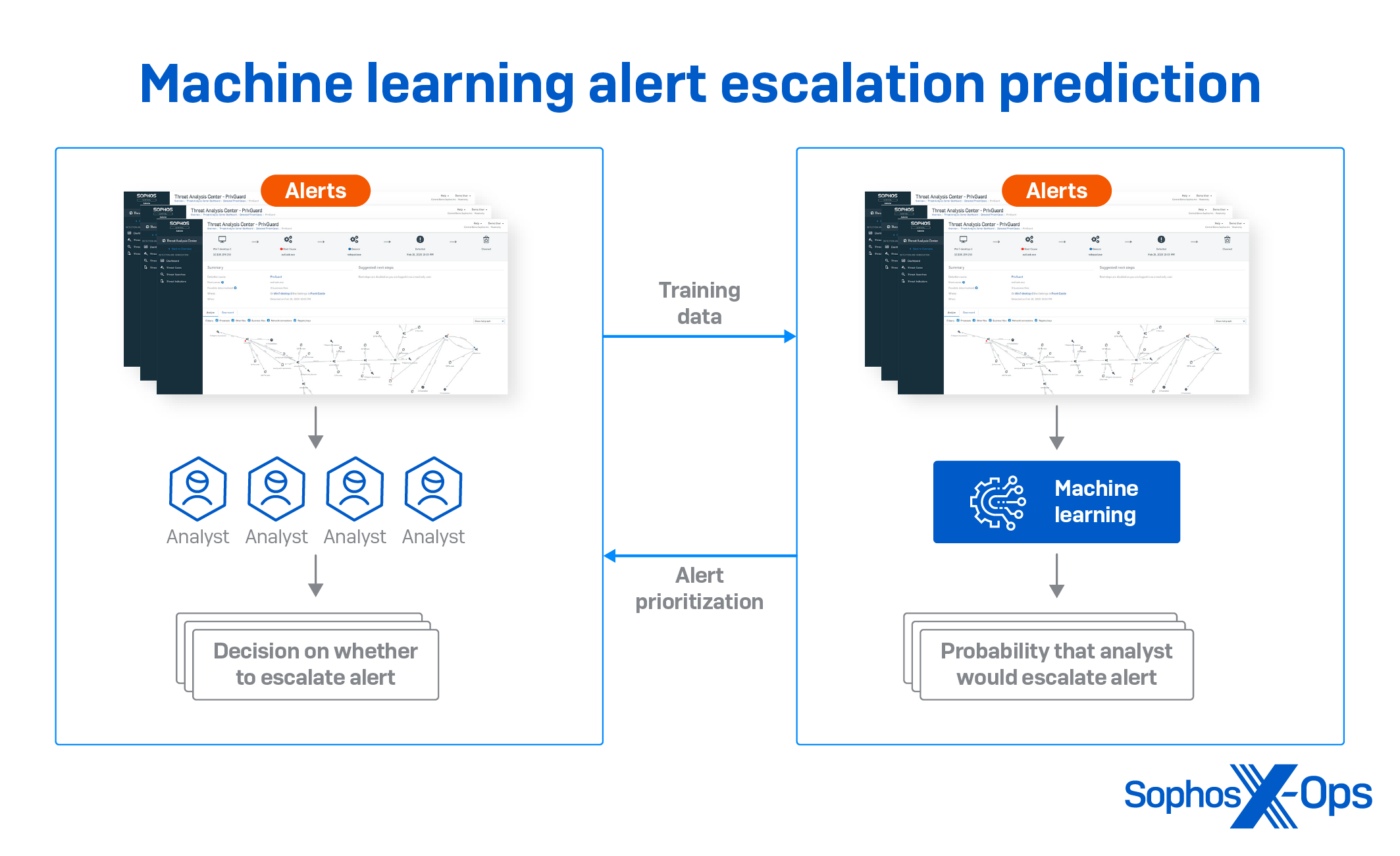

Sophos AI is building and iteratively improving a prototype for predicting which alerts analysts will deem relevant. The setup for alert escalation prediction is given in Figure 5. The panel on the left of the figure shows the workflow SOC analysts engage in as they triage alerts, deciding which alerts to discard as false positives and which to escalate as worthy of follow-up. The panel on the right shows how, in our experiments, machine learning learns to mimic and predict analysts’ decisions, so that we can prioritize the alerts they are most likely to escalate and they can address those first.

Figure 5: Sophos AI’s prototype alert recommendation engine

We built our prototype using internal data from Sophos’ MTR (Managed Threat Response) service, which at the time operated across approximately 4,000 unique customer environments. Table 5 shows our evaluation results as a simulated bake-off between a scenario in which we don’t use ML alert ranking (i.e., alerts exceeding a certain severity threshold are presented to analysts in random order) and one in which we do.

The impact of machine learning here is dramatic. Of the first 50 cases that analysts examined using ML ordering, they escalated 41; of the first 50 cases they examined without ML ordering, they escalated only four. The results are clear: a machine learning alert ranking engine trained on a feed of SOC analyst decision-making data can substantially improve SOC analysts’ efficacy, even on alerts produced by mature, traditional alert-generation logic.

| Random sorting (similar to real-world practice today) | Sorting by ML suspicion score |
|---|---|
| Examine 50 cases to discover 4 escalations | Examine 50 cases to discover 41 escalations |
| Examine 184 cases to discover 13 escalations | Examine 184 cases to discover 92 escalations |
| Examine 731 cases to discover 51 escalations | Examine 731 cases to discover 146 escalations |

Table 5: Results from Sophos AI’s case escalation prototype
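The comparison in Table 5 is easy to reproduce arithmetically. The Python below is a generic sketch, not our evaluation code: it shows how "escalations found in the first k cases" is computed from model scores and analyst labels, and then converts the Table 5 rows into hit rates and the lift of ML ordering over random ordering.

```python
from __future__ import annotations


def escalations_in_top_k(scores: list[float], escalated: list[bool], k: int) -> int:
    """Count escalated cases among the k highest-scoring cases (what an analyst sees first)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sum(1 for i in order[:k] if escalated[i])


# Rows of Table 5: (cases examined, escalations found in random order, escalations found in ML order)
TABLE_5 = [(50, 4, 41), (184, 13, 92), (731, 51, 146)]


def summarize(rows: list[tuple[int, int, int]]) -> list[tuple[int, float, float, float]]:
    """Per row: cases examined, random-order hit rate, ML-order hit rate, and ML lift over random."""
    return [(n, r / n, m / n, m / r) for n, r, m in rows]


for n, random_rate, ml_rate, lift in summarize(TABLE_5):
    print(f"first {n:>3} cases: random {random_rate:.1%}, ML {ml_rate:.1%}, lift {lift:.2f}x")
```

Random ordering finds escalations at a roughly constant 7-8% rate (4/50, 13/184, 51/731), whereas ML ordering finds them at 82%, 50%, and about 20%. The lift is therefore about 10.3x over the first 50 cases, 7.1x over the first 184, and 2.9x over the first 731: the advantage is concentrated at the top of the queue, where analyst time is scarcest.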

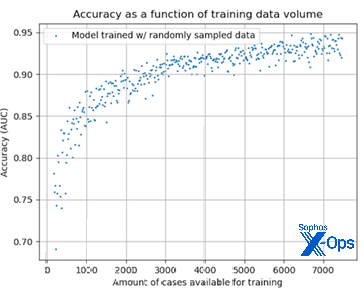

Figure 6 shows the results of an experiment designed to measure how the accuracy of our alert escalation prediction prototype changes as a function of training data volume. In the experiment, we randomly sampled training data, increasing the sample size up to 7,500 data points, and ran 500 experiments in total. As the figure shows, accuracy increased as training data volume increased. These results suggest that our results will continue to improve as we scale alert escalation prediction.

Figure 6: Accuracy improvements in case escalation prediction as training data size increases
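The shape of the experiment behind Figure 6 can be sketched in a few lines of Python. Everything here is a stand-in chosen for illustration: synthetic two-class Gaussian data replaces real alert features, and a nearest-centroid classifier replaces our escalation model. What carries over is the procedure: draw random training samples of increasing size from a fixed pool, retrain at each size, repeat several trials, and average held-out accuracy.

```python
from __future__ import annotations

import random
import statistics


def make_data(n: int, rng: random.Random, dims: int = 5) -> list[tuple[list[float], int]]:
    """Synthetic stand-in for alert features: two overlapping Gaussian classes."""
    data = []
    for _ in range(n):
        label = int(rng.random() < 0.5)
        center = 1.0 if label else -1.0
        data.append(([rng.gauss(center, 2.0) for _ in range(dims)], label))
    return data


def train_centroids(train: list[tuple[list[float], int]]) -> dict[int, list[float]]:
    """Nearest-centroid 'model': the mean feature vector of each class seen in training."""
    centroids = {}
    for label in (0, 1):
        rows = [x for x, y in train if y == label]
        if rows:
            centroids[label] = [statistics.fmean(column) for column in zip(*rows)]
    return centroids


def predict(centroids: dict[int, list[float]], x: list[float]) -> int:
    """Assign x to the class whose centroid is closest (squared Euclidean distance)."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def learning_curve(pool: list[tuple[list[float], int]], test: list[tuple[list[float], int]],
                   sizes: list[int], trials: int, seed: int = 0) -> dict[int, float]:
    """Mean held-out accuracy for each training-set size, averaged over random draws."""
    rng = random.Random(seed)
    curve = {}
    for size in sizes:
        accuracies = []
        for _ in range(trials):
            model = train_centroids(rng.sample(pool, size))
            correct = sum(predict(model, x) == y for x, y in test)
            accuracies.append(correct / len(test))
        curve[size] = statistics.fmean(accuracies)
    return curve
```

Averaging over repeated random draws is what makes such a curve meaningful at small sample sizes, where any single draw of training data can be unrepresentative.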

Google-like natural-language question answering using GPT-3-scale language models

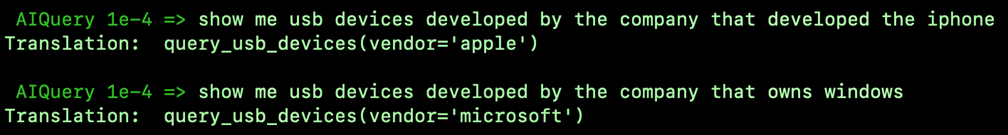

In addition to building a recommendation engine for security alerts, Sophos AI has taken important steps towards building a natural-language question answering system for security. The strategy that we have found gives the best results relies on very large language models pretrained on web-scale text datasets. We’ve found that we can fine-tune such large models to translate from diverse natural languages (such as English, French, and Chinese) into a domain-specific security search language we’ve developed.

Figure 7 demonstrates how our prototype, called AIQuery, translates between unstructured, free-form queries like “show me usb devices developed by the company that developed the iphone,” and a domain-specific search language we’ve defined for querying a database of security-relevant data (query_usb_devices(vendor='apple')). Remarkably, AIQuery has learned that a reference to “devices developed by the company that developed the iphone” should be translated to “apple” in the context of a structured database query, and that a reference to “developed by the company that owns windows” should be translated to Microsoft.

Figure 7: Examples of translations from Sophos’ natural language query interface
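Because a language model can emit malformed or unexpected output, it is prudent to treat each translated query as untrusted text and validate it before running it. The Python sketch below assumes, hypothetically, that the security search language uses Python-like call syntax with literal keyword arguments, as in the query_usb_devices(vendor='apple') example; the language's actual grammar and function list are not described here, so ALLOWED_QUERIES is an illustrative placeholder.

```python
from __future__ import annotations

import ast

# Hypothetical allow-list: only the USB query from Figure 7 is known from the text.
ALLOWED_QUERIES = {"query_usb_devices"}


def parse_dsl_query(text: str) -> tuple[str, dict[str, object]]:
    """Parse a model-generated call like query_usb_devices(vendor='apple') without executing it."""
    try:
        tree = ast.parse(text.strip(), mode="eval")
    except SyntaxError as exc:
        raise ValueError(f"not a well-formed query: {text!r}") from exc
    call = tree.body
    if not isinstance(call, ast.Call) or not isinstance(call.func, ast.Name):
        raise ValueError("expected a single plain function call")
    if call.func.id not in ALLOWED_QUERIES:
        raise ValueError(f"unknown query function: {call.func.id}")
    if call.args:
        raise ValueError("only keyword arguments are allowed")
    params: dict[str, object] = {}
    for kw in call.keywords:
        # kw.arg is None for **kwargs; values must be literals, never nested expressions
        if kw.arg is None or not isinstance(kw.value, ast.Constant):
            raise ValueError("arguments must be literal keyword values")
        params[kw.arg] = kw.value.value
    return call.func.id, params
```

Parsing with ast.parse in "eval" mode inspects the structure of the model's output without ever evaluating it, so an output such as __import__('os').system('ls') is rejected rather than executed, while query_usb_devices(vendor='apple') yields ('query_usb_devices', {'vendor': 'apple'}).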

We expect that technologies like our AIQuery natural language interface will make answering security-relevant questions about complex network topologies dramatically easier by answering the majority of user questions without requiring users to navigate complicated menu systems or write complex queries in languages like SQL. This will free users to focus on the truly hard information retrieval problems in security that require writing custom query code.

AI-UX value circuit-based living-off-the-land detection

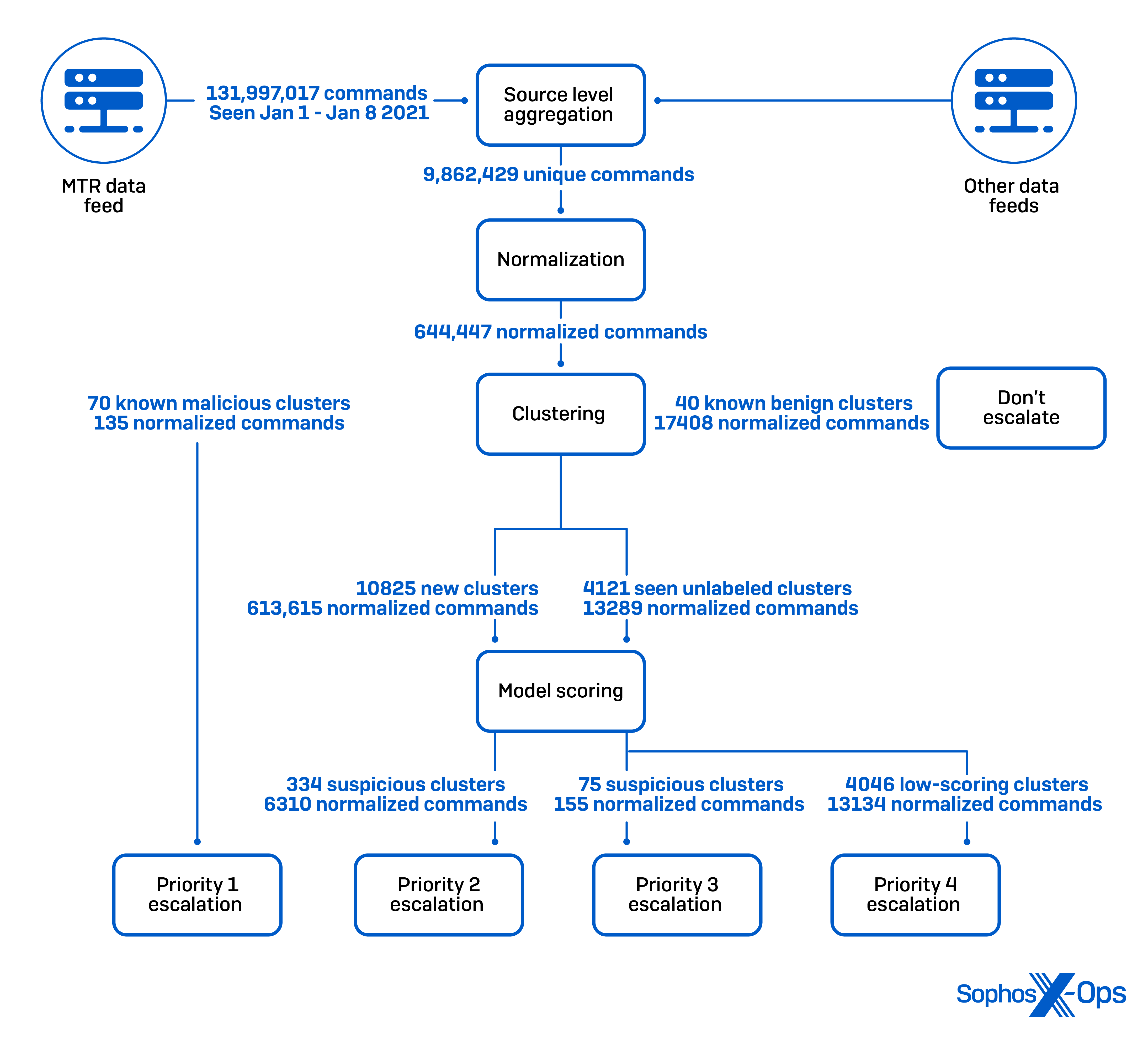

A third prong of Sophos’ research investments in building the AI-assisted SOC focuses on detecting living-off-the-land (also known as ‘fileless’) adversary behavior based on a real-time human/ML feedback loop. In this research, we present novel, suspicious clusters to SOC analysts, who label clusters as either benign or suspicious.

When new behaviors appear that match clusters previously labeled benign by a quorum of analysts, we dismiss them, whereas when new behaviors form new clusters, or appear suspicious to our machine learning model, we present them to analysts for a decision. As quora of analysts label clusters ‘malicious’, new behaviors that match these clusters are prioritized for review above all other behaviors.

Figure 8: The architecture diagram for our AI-UX value circuit living-off-the-land detection model

Figure 8 depicts our prototype’s workflow. While a complete description of this prototype is beyond our present scope, of note is the reduction of 132 million unique behavioral observations to 334 high-priority escalations. By combining clustering with suspicion scoring and anomaly detection, and then incorporating analyst feedback to improve detection accuracy, our prototype dramatically reduces analyst workload and allows analysts to home in on truly interesting events amid an otherwise intractable data deluge.
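The routing rules described above can be summarized in a small Python sketch. It is a simplification under stated assumptions: behaviors are numeric feature vectors, a fixed-radius nearest-centroid match stands in for the prototype's clustering, the ML suspicion score is supplied by the caller, and the quorum size, radius, and threshold values are hypothetical. The text does not specify what happens to a low-suspicion behavior that matches a not-yet-labeled cluster; here it simply waits for that cluster to be labeled.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Cluster:
    centroid: list[float]
    benign_votes: int = 0
    malicious_votes: int = 0


@dataclass
class BehaviorTriage:
    quorum: int = 3                    # hypothetical number of analyst votes that settles a cluster
    radius: float = 1.0                # hypothetical max distance for a behavior to join a cluster
    suspicion_threshold: float = 0.8   # hypothetical cutoff on the ML suspicion score
    clusters: list[Cluster] = field(default_factory=list)

    def _match(self, behavior: list[float]) -> Cluster | None:
        """Nearest existing cluster within radius, or None if the behavior is novel."""
        nearest = min(self.clusters, key=lambda c: math.dist(behavior, c.centroid), default=None)
        if nearest is not None and math.dist(behavior, nearest.centroid) <= self.radius:
            return nearest
        return None

    def route(self, behavior: list[float], suspicion: float) -> tuple[str, Cluster]:
        """Decide what happens to one observed behavior; also return its cluster for voting."""
        cluster = self._match(behavior)
        if cluster is None:
            cluster = Cluster(centroid=list(behavior))
            self.clusters.append(cluster)
            return "analyst_review", cluster      # a new cluster goes to analysts
        if cluster.malicious_votes >= self.quorum:
            return "priority_review", cluster     # crowd-labeled malicious: review ahead of all else
        if cluster.benign_votes >= self.quorum:
            return "dismiss", cluster             # crowd-labeled benign: dismiss
        if suspicion >= self.suspicion_threshold:
            return "analyst_review", cluster      # the ML model finds it suspicious
        return "await_cluster_label", cluster     # assumption: unlabeled, low-suspicion matches wait

    @staticmethod
    def record_vote(cluster: Cluster, malicious: bool) -> None:
        """One analyst's label for a cluster."""
        if malicious:
            cluster.malicious_votes += 1
        else:
            cluster.benign_votes += 1
```

Because analysts vote on clusters rather than on individual events, a single quorum decision disposes of every future behavior that lands in the same cluster, which is how this style of feedback loop can shrink a very large stream of observations down to a short review queue.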

Conclusion

This whitepaper argues that the evolution of user interfaces points towards a seamless and sophisticated integration of AI models with user intent, that the most sophisticated areas of tech have already achieved this, and that in the next 5 years, SOC software product vendors will either achieve this within security or become increasingly irrelevant. In effect, we will achieve a “security operations recommendation engine” that rivals the utility we’ve come to expect from Google, Amazon, and Netflix. We’ve shown the following:

- XDR platforms, with their per-customer warehousing of the broad spectrum of security data in cloud data stores, increasingly provide the data needed to train these AI models. Differentiated XDR platforms will not only enable security operations but will also serve as the very environment in which these AI models are trained and continuously honed.

- The dizzying secular innovation occurring within AI, including algorithmic innovation, special-purpose AI hardware, and rapidly improving open-source AI tools, will be an enabling factor for developing the requisite security AI models. The difference between frivolous, unquantifiable claims of AI in security and useful AI assistance will become as clear as the difference between search results from AltaVista in 1995 and Google in 2022.

- The development of “APIs everywhere” with respect to the configuration of IaaS, SaaS, and security products means that looking ahead, autonomous agents will have the ability to update organizations’ security posture, facilitating human-supervised AI management of network environments. Security operation platforms that fail to embrace the “Everything as Code” movement will be short-lived.

- Our research gives early indication that our vision of the AI-assisted SOC is achievable on a five-year timeline. None of our argument should be taken to suggest that our vision will be easy to achieve, that it will be risk-free even if we execute well on our goals, or that, even if we do achieve our goals, security will be a “solved problem.” But we do believe that as network defenders and security product, platform, and service developers, it is our moral mission to continuously improve, and that this is the path to doing so.

For more information on Sophos X-Ops, please see our FAQ.

Acknowledgements

Thanks to Joe Levy for his leadership in helping to define this vision and for his feedback on this blog post; thanks also to Greg Iddon for his insights into the relationship between AI developments in fields outside of security and the path forward for AI within security, and for his authorship of an early version of this document.
